Project Description

Soft actuators for kinesthetic interactions in virtual reality

Traditional game controllers rely on vibrotactile feedback as the only haptic sensation transmitted to the user. With the advent of consumer virtual reality platforms, users can now immerse themselves more deeply in video games, with visuals that track every head movement. The ability to translate in-game action into physical forces on the user, however, hasn’t improved much in the two decades since the Nintendo Rumble Pak was introduced.

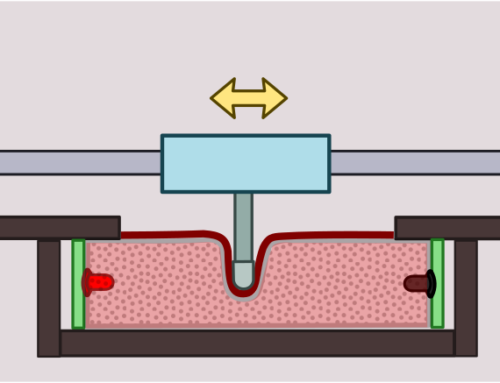

Fluidic elastomer actuators (FEAs) address this gap in technology by safely providing large forces with high spatial resolution. Composed of soft rubbers, FEAs have an intrinsic compliance that enables safe and natural human-computer interactions. Because they are processed in the liquid state, these actuators can be formed into nearly any shape, and large arrays of actuators can be readily integrated into a single device.

For a first proof of concept, I created a sleeve with twelve FEAs that fits over an HTC Vive controller. Each FEA is individually controlled with pressures up to 30 psi and can dynamically impart pulses and sustained forces to the user’s hand while they hold the controller.

Integration with NVIDIA’s VR Funhouse

To test the capabilities of this prototype, I worked with NVIDIA Research and the Organic Robotics Lab to combine the controller with NVIDIA’s VR Funhouse. In this game, the player is immersed in a virtual carnival where each minigame provides a different type of physical interaction (e.g., shooting revolvers, spraying goo, or boxing).

We created a different kinesthetic experience for each minigame; two of these are shown to the left. For the goo gun experience, each row of actuators is pulsed in sequence to simulate flow through the user’s hand. The amplitude and flow of these pulses are proportional to the flow rate of the green goo shooting from the gun. Another example is a revolver experience that simulates recoil every time the trigger is pulled.
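To make the goo gun pattern concrete, here is a minimal sketch of how a row-by-row pulse sequence could be generated. It is an illustration only, not the code used in the demo: the 4 x 3 row layout, the normalized `flow_rate` input, and the function name `goo_gun_frames` are all assumptions; only the twelve actuators and the 30 psi limit come from the description above.

```python
from typing import List

MAX_PRESSURE_PSI = 30.0  # upper pressure limit stated in the text
NUM_ROWS = 4             # assumption: twelve actuators arranged as 4 rows
ACTUATORS_PER_ROW = 3    # assumption: 3 actuators per row


def goo_gun_frames(flow_rate: float) -> List[List[float]]:
    """Return one frame of per-actuator pressures (psi) per row pulse.

    flow_rate is a hypothetical normalized goo flow rate in [0, 1].
    Each frame pressurizes a single row; playing the frames in order
    sweeps the pulse along the hand.
    """
    # Clamp the input so the commanded pressure never exceeds the limit.
    flow = min(max(flow_rate, 0.0), 1.0)
    amplitude = flow * MAX_PRESSURE_PSI  # amplitude proportional to flow

    frames = []
    for active_row in range(NUM_ROWS):
        frame = [0.0] * (NUM_ROWS * ACTUATORS_PER_ROW)
        start = active_row * ACTUATORS_PER_ROW
        for i in range(start, start + ACTUATORS_PER_ROW):
            frame[i] = amplitude
        frames.append(frame)
    return frames


if __name__ == "__main__":
    # Print the sweep for a half-strength flow.
    for frame in goo_gun_frames(0.5):
        print(frame)
```

In a sketch like this, the speed of the sweep would be set by how quickly the frames are played back, which could also be scaled with the flow rate.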

Demo at GPU Technology Conference (GTC 2017)

To get user feedback on this new technology and the experiences we created, we traveled to NVIDIA’s GPU Technology Conference, held May 8-11, 2017, in San Jose. We gave live demos to ~200 attendees over the course of three days. A sampling of their reactions is included in the project overview video below.

Acknowledgements

This work was supported by the National Science Foundation Graduate Research Fellowship (Grant No. DGE-1144153).